The advent of large language models (LLMs) has revolutionized machine translation—but can these general-purpose behemoths unlock the secrets of ancient literature? A recent study from Nanjing Agricultural University demonstrates that with the right domain focus and specialized data, even compact LLMs can excel at bridging millennia of linguistic evolution. Here’s a deep dive into the research, plus broader insights into the challenges, applications, and future of AI-driven ancient-book translation.

The Promise of Vertical-Domain LLMs

Standard machine translation engines struggle with archaic grammar, obsolete vocabulary, and non-standardized scripts. By contrast, vertical-domain LLMs are fine-tuned on targeted corpora to capture the unique patterns of a narrow field. In this case, the researchers fine-tuned a specialized model, “Xunzi-Baichuan2-7B”, on a corpus of roughly one million ancient-to-modern Chinese parallel text pairs. The resulting model outperforms its general-domain peers on classical passages across the BLEU, chrF, and TER metrics described below, producing translations that are both more fluent and more faithful to the source meaning.

Data Curation: Mixing Traditional and Simplified Corpora

A cornerstone of this success was the construction of a clean, balanced dataset:

- Traditional Texts: 307,000 pairs drawn from authoritative editions of the Twenty-four Histories, ensuring literary and historical coverage.

- Simplified Texts: 926,000 pairs crawled from open-source repositories, aligned and filtered for quality.

- Unified Dataset: The combined pairs were filtered by length (15–200 characters) and quality, yielding 876,000 high-quality pairs for instruction fine-tuning.

This mixed approach equips the model to handle both traditional-character sources (with their variant character forms and literary idioms) and simplified-character text, which matters for accessibility and scholarly use.
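The length filter described above can be sketched in a few lines of Python. This is a minimal illustration rather than the study's actual pipeline: the article does not say which side of the pair the 15–200 character window applies to, so the sketch assumes the ancient (source) side, and the duplicate removal is an added assumption about what quality filtering might include. The name `filter_pairs` is hypothetical.

```python
from typing import Iterable, List, Tuple

Pair = Tuple[str, str]  # (ancient_text, modern_text)

MIN_CHARS = 15   # lower bound quoted in the article
MAX_CHARS = 200  # upper bound quoted in the article


def filter_pairs(pairs: Iterable[Pair]) -> List[Pair]:
    """Keep pairs whose ancient side fits the length window; drop blanks and duplicates.

    Assumption: the window is applied to the ancient (source) side only.
    """
    seen = set()
    kept = []
    for ancient, modern in pairs:
        ancient, modern = ancient.strip(), modern.strip()
        if not ancient or not modern:
            continue  # one side is empty after trimming
        if not (MIN_CHARS <= len(ancient) <= MAX_CHARS):
            continue  # too short or too long
        if (ancient, modern) in seen:
            continue  # exact duplicate (an assumed quality rule)
        seen.add((ancient, modern))
        kept.append((ancient, modern))
    return kept


# Toy input: one valid pair, one too short, one duplicate, one too long.
sample = [
    ("子曰" * 10, "孔子说" * 10),
    ("短", "太短了"),
    ("子曰" * 10, "孔子说" * 10),
    ("古" * 201, "今" * 300),
]
print(len(filter_pairs(sample)))
```

Because Python's `len` counts Unicode code points, each Chinese character counts as one unit, which matches a character-based length limit.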

Model Selection and Fine-Tuning

Rather than chase ever-larger parameter counts, the team compared six open-source LLMs in the 7-billion-parameter class, balancing performance against practical resource demands. Using low-rank adaptation (LoRA) and instruction tuning, they adapted each model to follow prompts like “Translate this ancient passage into fluent modern Chinese.” Three evaluation metrics (BLEU, chrF, and TER) quantified the gains, with Xunzi-Baichuan2-7B emerging as the top performer. Full-parameter tuning of that model then yielded the best overall translations.
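To make the two ideas in this section concrete, here is a small Python sketch. The first function wraps a parallel pair in an instruction record; the prompt wording comes from the article, but the `instruction`/`input`/`output` field names are an assumed, commonly used layout and not necessarily the study's schema. The second function counts the trainable parameters LoRA adds to one weight matrix, which is why it is cheaper than full-parameter tuning. The 4096 x 4096 layer size, the rank of 8, and the example sentence pair are illustrative assumptions.

```python
import json

# Prompt wording quoted in the article; the record layout is an assumption.
INSTRUCTION = "Translate this ancient passage into fluent modern Chinese."


def to_instruction_record(ancient: str, modern: str) -> dict:
    """Wrap one parallel pair as an instruction-tuning example."""
    return {"instruction": INSTRUCTION, "input": ancient, "output": modern}


def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters in the low-rank update B (d_out x rank) @ A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative pair, not taken from the study's corpus.
record = to_instruction_record("学而时习之", "学了之后按时温习")
print(json.dumps(record, ensure_ascii=False))

# Hypothetical 4096 x 4096 projection with rank 8: adapter size vs. full matrix.
full = 4096 * 4096
adapter = lora_trainable_params(4096, 4096, rank=8)
print(adapter, full, adapter / full)
```

With these assumed sizes the adapter holds 1/256 of the parameters of the matrix it modifies, which suggests why LoRA is a practical first pass across several candidate models before committing to full-parameter tuning of the best one.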

Evaluation: Beyond Numbers to Nuance

- BLEU (Bilingual Evaluation Understudy): Gauged n-gram overlap with human references, showing strong literal accuracy.

- chrF (Character F-score): Captured subtle character-level matches—key for logographic scripts where a single glyph carries weight.

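- TER (Translation Edit Rate): Counts the edits (insertions, deletions, substitutions, and shifts) needed to turn the AI output into the reference, normalized by reference length. Lower is better, and the score serves as a proxy for post-editing effort.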

Across all three, the domain-tuned models reduced post-editing effort by 15–25% compared to base LLMs—transforming what was once a specialist’s task into a streamlined workflow.
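For intuition about how the character-level metrics behave, the following Python sketch implements simplified versions of two of them. It is not the scoring code used in the study: `chrf` averages character n-gram precision and recall and combines them with an F-beta score (beta = 2 and n up to 6 are assumed here as common defaults), and `edit_rate` is a character-level Levenshtein distance divided by reference length, which corresponds to TER without its block-shift operation. For reportable numbers, an established toolkit is the safer choice.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams, ignoring spaces."""
    chars = text.replace(" ", "")
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified chrF: F-beta over averaged character n-gram precision/recall."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # one text is shorter than n characters
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


def edit_rate(hypothesis: str, reference: str) -> float:
    """Character Levenshtein edits / reference length (TER without shifts)."""
    prev = list(range(len(reference) + 1))
    for i, h in enumerate(hypothesis, start=1):
        curr = [i]
        for j, r in enumerate(reference, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / max(len(reference), 1)


# Illustrative strings: the hypothesis differs from the reference by one character.
reference = "学了之后按时温习"
hypothesis = "学了以后按时温习"
print(chrf(hypothesis, reference), edit_rate(hypothesis, reference))
```

Higher is better for chrF, while lower is better for the edit rate, so the two scores move in opposite directions as a translation improves.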

Broader Context: Global Efforts in Ancient-Text MT

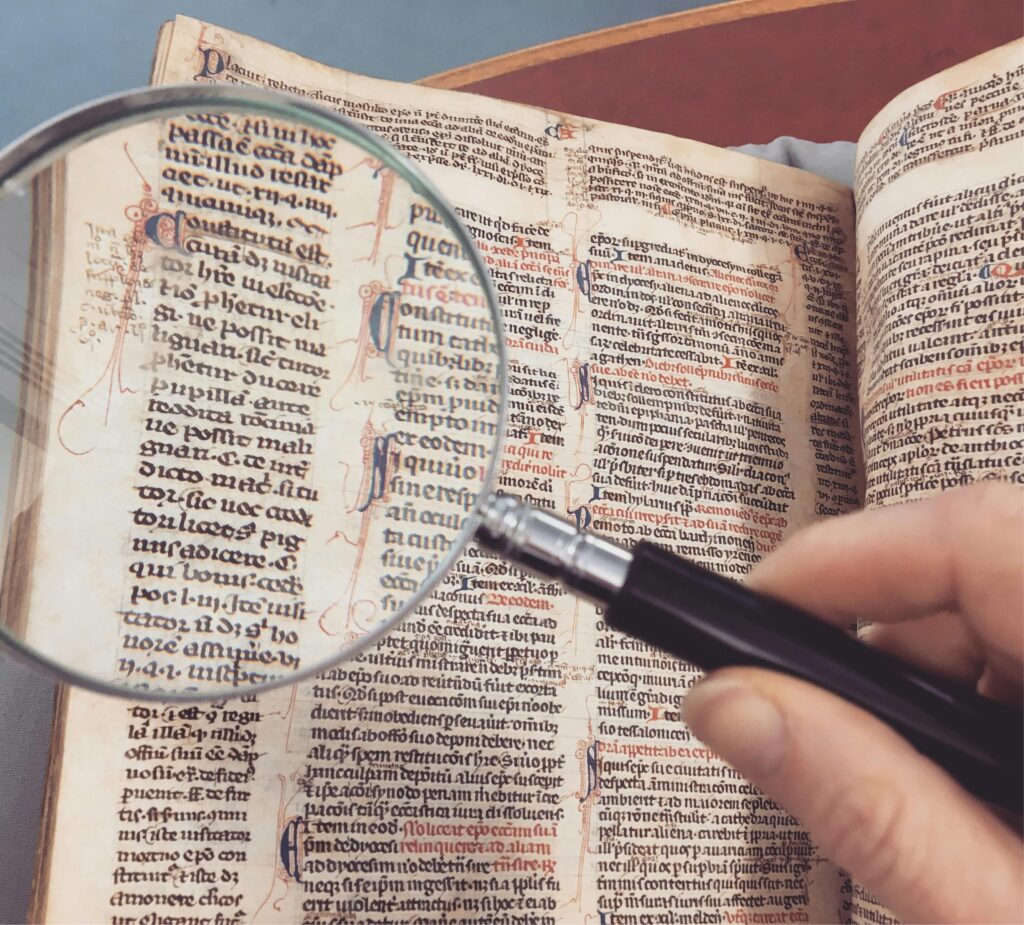

Chinese classics are just one frontier. Parallel initiatives target Sanskrit epics, ancient Greek drama, Latin ecclesiastical texts, and cuneiform inscriptions. Common challenges include:

- OCR and Grapheme Recognition: Scanned manuscripts demand robust character-recognition pipelines before translation can begin.

- Script Variation: Historical forms (e.g., Seal, Clerical scripts) require grapheme-level recognition models and tokenizers that cope with diverse fonts and handwriting.

- Low-Resource Constraints: With limited parallel corpora, researchers augment data via back-translation, synthetic paraphrasing, and bilingual lexicons.

Collaborations between digital-humanities scholars, sinologists, and AI engineers are forging the interdisciplinary toolkits needed to surmount these obstacles.
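Of the augmentation techniques listed above, back-translation is the easiest to sketch. The idea is to take monolingual text in the target language (here, modern Chinese), run it through a reverse model to produce a synthetic source side, and add the resulting pairs to the training data. In the Python sketch below, `back_translate` is a hypothetical helper, and `toy_reverse_model` is only a stand-in character-substitution table so that the example runs; a real setup would call a trained modern-to-ancient model instead.

```python
from typing import Callable, Iterable, List, Tuple


def back_translate(
    modern_sentences: Iterable[str],
    modern_to_ancient: Callable[[str], str],
) -> List[Tuple[str, str]]:
    """Build synthetic (ancient, modern) pairs from monolingual modern text."""
    pairs = []
    for modern in modern_sentences:
        synthetic_ancient = modern_to_ancient(modern)
        # Skip outputs that are empty or unchanged: they add no training signal.
        if synthetic_ancient and synthetic_ancient != modern:
            pairs.append((synthetic_ancient, modern))
    return pairs


# Stand-in for a real reverse model: a toy substitution table, purely illustrative.
TOY_LEXICON = {"说": "曰", "我": "吾"}


def toy_reverse_model(text: str) -> str:
    return "".join(TOY_LEXICON.get(ch, ch) for ch in text)


synthetic = back_translate(["孔子说", "天气"], toy_reverse_model)
print(synthetic)
```

The human-written sentence stays on the target side, so the model still learns to produce natural modern Chinese even when the synthetic ancient side is noisy.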

Applications: From Scholarship to Public Access

- Academic Research: Accelerates philological studies by producing draft translations for expert refinement, freeing scholars to focus on interpretation.

- Cultural Preservation: Breathes new life into endangered texts—edicts, poetry, philosophical tracts—making them searchable and analyzable.

- Education and Outreach: Enables bilingual editions, interactive apps, and audio-narration services that engage students and enthusiasts worldwide.

- Cross-Cultural Dialogue: Opens doors for comparative literature, linking ancient Chinese thought with global philosophical traditions.

Challenges and Future Directions

- Hallucinations and Fidelity: LLMs can invent plausible but incorrect details; combining them with rule-based checks and human oversight remains essential.

- Multimodal Integration: Future systems will fuse images (manuscript photos), transliteration, and translation—helping users verify glyph interpretations in context.

- Ethical and Licensing Concerns: Many ancient-text datasets have complex rights; building open, ethically sourced corpora is a high priority.

- Scaling to Other Languages: Porting this approach to languages with more fragmented or scarce digital archives requires community-driven data efforts and novel augmentation techniques.

Emerging techniques like retrieval-augmented generation, active-learning loops, and meta-learning promise ever greater accuracy and adaptability.
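As one example of the rule-based checks mentioned above, the Python sketch below flags translations that look suspicious before they reach a human reviewer. It is an assumed design, not something described in the study: the function name `fidelity_flags`, the glossary format (ancient term mapped to its expected modern rendering), and the length-ratio threshold of 4.0 are all hypothetical choices for illustration.

```python
from typing import Dict, List


def fidelity_flags(
    source: str,
    translation: str,
    glossary: Dict[str, str],
    max_ratio: float = 4.0,  # assumed threshold, to be tuned on real data
) -> List[str]:
    """Return human-readable warnings for a single translation."""
    if not translation.strip():
        return ["empty translation"]
    flags = []
    # A translation far longer than its source may contain invented material.
    ratio = len(translation) / max(len(source), 1)
    if ratio > max_ratio:
        flags.append(f"length ratio {ratio:.1f} exceeds {max_ratio}")
    # Terms from the glossary that occur in the source should survive translation.
    for ancient_term, modern_term in glossary.items():
        if ancient_term in source and modern_term not in translation:
            flags.append(f"missing expected term: {modern_term}")
    return flags


# Illustrative glossary: a proper name that should be carried over unchanged.
glossary = {"孔子": "孔子"}
print(fidelity_flags("孔子曰", "老子说", glossary))
print(fidelity_flags("孔子曰", "孔子说", glossary))
```

Checks like these cannot confirm that a translation is correct; they only route likely problems to a human, which keeps expert oversight in the loop.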

Conclusion

The machine translation of ancient books has entered a new era. By fine-tuning compact LLMs on carefully curated parallel corpora, researchers have demonstrated that even complex classical texts can be rendered accurately and fluently. As data, models, and human-in-the-loop processes evolve, AI-powered cultural preservation is poised to make humanity’s oldest wisdom widely accessible, ensuring that voices from the past speak clearly in the present.

Frequently Asked Questions (FAQs)

Q1: Why are ancient texts harder to translate than modern ones?

Ancient works feature archaic grammar, obsolete vocabulary, non-standard scripts, and cultural references that require specialized knowledge and domain-specific data.

Q2: What is instruction fine-tuning?

It’s a process where an LLM is trained on input-output pairs—“instructions” with desired outputs—so it learns to follow prompts and perform tasks like translation more reliably.

Q3: How do researchers evaluate translation quality?

Common metrics include BLEU (n-gram overlap), chrF (character-level F-score), and TER (edit distance to a reference translation, a proxy for post-editing effort). Human evaluation remains the ultimate benchmark.

Q4: Can these models handle handwritten manuscripts?

Not yet directly—preprocessing with advanced OCR and glyph-recognition models is required before feeding text into the translation pipeline.

Q5: Are the translated texts freely available?

Most research code and models in this space are published open-source. Parallel corpora often have varied licensing—check individual dataset terms before reuse.

Q6: What’s next for ancient-text MT?

Future work focuses on multimodal systems, active-learning pipelines that integrate human corrections, and extension to low-resource languages through community-sourced corpora.

Sources: Nature